Welcome to the third and last part of the bread journey! In parts I and II, I wrote about how I scraped recipes from The Fresh Loaf, explored and visualized the text data, and used topic modeling to see what trends exist. In this post, I describe how I trained two different language generation models to generate AI-based recipes for sourdough bread.

Markov-chain language models

After all those ways of exploring the data, I was itching to build a model that could predict new recipes for me to try. Before jumping into the world of neural networks and Natural Language Processing (NLP) acronyms (RNN, LSTM, ELMo, BERT, etc.), I started with something simpler: a Markov chain text generator. Specifically, I used the Markovify package, which builds a Markov chain over words. With state_size=2, as in the code below, it predicts each next word from the previous two words.

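```python
import markovify

# df_recipe is the DataFrame of scraped recipes built in parts I and II
recipes = df_recipe['Recipe'].tolist()

# NewlineText treats each newline-separated line as a sentence;
# state_size=2 conditions every next word on the previous two words
text_model = markovify.NewlineText(recipes, state_size=2)

for idx in range(2):
    # make_sentence() returns None if it cannot build an acceptable
    # sentence within its default number of tries
    print(idx, text_model.make_sentence())
```

The results change every time the code is executed, because make_sentence() takes a random walk through the chain, picking each next word with probability proportional to how often it followed the current state in the corpus. The “recipes” are usually nonsensical and often hilarious. They vary in length and are very English-adjacent. Here are a few of them: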

Well after thinking about this flour as it has been a while since I last baked a ciabatta in all a successful venture considering the accidental changes I made. This was a medical nuclear physicist, at the ingredients and noticed that the bread with potatoes in it. Still soft and moist inside, probably due to the pool. A guy across the street has an Air B&B right on the floured kitchen towel…and yes I did some damage during lamination. What do you do with forumulas. One think I had to snap it up! I am going to bring your favorite bread then suit yourself. will will have to say you that we have two brightly coloured loaves: one yellow and one with all purpose flour 19 g sugar 5 g salt 250 g 100% hydration Wholewheat levain I got amazing crumb!

I had an excess of sweet potatoes are 70% hydration. That really helped in making sure I should be surprised but bulk fermentation and 5 oz. whole wheat. I chose an iteration of this post can be really good bread! So with that new Wustof bred knife that come highly recommended.
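To see why the output reads like this, here is a minimal from-scratch sketch of the transition table a word-level Markov chain builds. It is an illustration, not Markovify's actual implementation. The function name, the `<s>`/`</s>` boundary markers and the two toy recipes are made up for the example. With state_size=2, every pair of consecutive words maps to counts of the words that followed it anywhere in the corpus. So when two recipes share a pair like “100% hydration”, a random walk through the table can enter through one recipe and leave through the other. With state_size=1 the branching is even wider.

```python
from collections import Counter, defaultdict

BEGIN, END = "<s>", "</s>"  # illustrative sentence-boundary markers


def build_chain(texts, state_size=2):
    """Map each tuple of `state_size` consecutive words to counts of the next word."""
    chain = defaultdict(Counter)
    for text in texts:
        # Pad with BEGIN so the first words have a state, and close with END
        words = [BEGIN] * state_size + text.split() + [END]
        for i in range(len(words) - state_size):
            state = tuple(words[i:i + state_size])
            chain[state][words[i + state_size]] += 1
    return chain


# Two made-up recipe snippets that share the phrase "100% hydration"
toy_recipes = [
    "mix flour and water to 100% hydration then rest the dough",
    "feed the starter at 100% hydration and bake the loaf in the morning",
]

chain1 = build_chain(toy_recipes, state_size=1)
chain2 = build_chain(toy_recipes, state_size=2)

# One word of context: "the" is followed by words from both recipes
print(dict(chain1[("the",)]))
# Two words of context: the shared pair still branches into both recipes
print(dict(chain2[("100%", "hydration")]))
```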

I wanted to see whether the Markovify results could be improved, and I came across this post describing how to generate many sentences and score them to pick the best ones. However, the resulting “recipes” were just as nonsensical: the model picked up key phrases used in bread baking but strung them together in a way that, from start to finish, sounds like an alien trying to learn how to cook on Earth.

Sourdough Pizza with balsamic olive oil was coating the dough yellow or not? Bake hot & fast or cooler and more slowly with a good crust and can hold the weight of the best quality as it was worth the wait. Crumb is chewy, moist and fragrant, with a good bake for these buns. Therefore these are browning evenly every time now with zero muffin top / bottom. Will follow up with a soaker. Hard wheat, rye, spelt, and kamut comprised the whole thing that I now know what needs to be able to buy your old dad some more fittings and a good replica of the loaf pan. Pulla is a new game for me. And after the ghee and 3 green cardamoms over medium high heat, until mostly dry and caramelized. I got it!
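The generate-and-rank idea from that post can be sketched in plain Python. I have not reproduced that post's scoring. As an assumption, this sketch scores each candidate by its average log-probability per word under the chain, which is only one reasonable choice. All names here (build_chain, sample_sentence, avg_log_prob, best_of_n) are hypothetical. Ranking this way favors candidates made of frequent transitions, but it cannot make a text coherent from start to finish, because the chain still only looks two words back.

```python
import math
import random
from collections import Counter, defaultdict

BEGIN, END = "<s>", "</s>"  # illustrative sentence-boundary markers


def build_chain(texts, state_size=2):
    """Map each tuple of `state_size` consecutive words to counts of the next word."""
    chain = defaultdict(Counter)
    for text in texts:
        words = [BEGIN] * state_size + text.split() + [END]
        for i in range(len(words) - state_size):
            chain[tuple(words[i:i + state_size])][words[i + state_size]] += 1
    return chain


def sample_sentence(chain, rng, state_size=2, max_words=40):
    """Random walk from the start state, choosing next words in proportion to counts."""
    state, out = (BEGIN,) * state_size, []
    while len(out) < max_words:
        followers = chain[state]
        word = rng.choices(list(followers), weights=list(followers.values()))[0]
        if word == END:
            break
        out.append(word)
        state = state[1:] + (word,)
    return " ".join(out)


def avg_log_prob(chain, sentence, state_size=2):
    """Mean log P(word | previous words), including the end marker."""
    words = sentence.split() + [END]
    state, total = (BEGIN,) * state_size, 0.0
    for word in words:
        followers = chain.get(state, Counter())  # .get avoids growing the defaultdict
        count = followers[word]
        if count == 0:  # transition never seen in the corpus
            return float("-inf")
        total += math.log(count / sum(followers.values()))
        state = state[1:] + (word,)
    return total / len(words)


def best_of_n(chain, n=200, seed=0):
    """Generate n candidates and keep the one with the highest score."""
    rng = random.Random(seed)
    candidates = [sample_sentence(chain, rng) for _ in range(n)]
    return max(candidates, key=lambda s: avg_log_prob(chain, s))


corpus = ["mix the flour and water", "mix the levain into the dough",
          "fold the dough every hour"]
print(best_of_n(build_chain(corpus), n=50))
```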

Recipe prediction with neural networks

Having seen what simpler algorithms could do, I decided to plunge into neural networks to see if I could improve on these results. After taking Andrew Ng’s Coursera class on neural networks and researching how to tackle Natural Language Generation tasks, an approach based on neural networks with memory seemed like the right way forward. My work at Gyant, as well as a linguistics class during my undergrad, taught me that context and history are very important: a unigram- or bigram-based model alone cannot produce meaningful new sentences or compare how similar they are. Long Short-Term Memory (LSTM) networks, a flavor of Recurrent Neural Network (RNN), seem especially well suited to this task, because their architecture is built to take history and the previous state of the system into account. They also reminded me of the simulations I ran during my PhD: molecules also retain information over time, and it is important to wait for this memory to decay before collecting uncorrelated statistics. For NLP, my aim was the opposite, to make use of that memory, so I built a word-level language model using LSTMs to model bread recipes.
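For reference, these are the standard LSTM cell equations (the common variant with a forget gate). Here $x_t$ is the embedding of the $t$-th word, $h_t$ is the hidden state, $\sigma$ is the logistic sigmoid and $\odot$ is element-wise multiplication. The cell state $c_t$ is the memory. The forget gate $f_t$ decides how much of $c_{t-1}$ to keep, and the input gate $i_t$ decides how much new information to write. This is what lets the network carry context across a sequence.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```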

I chose not to build a character-level language model, as it would take longer to train and there was no guarantee of legible words after a limited number of training epochs. After looking into Google Colab, I chose to train the model on my MacBook Air instead, so that I would not run into Colab’s time and compute limits. Since my goal was to get a recipe to make real bread with, I did not experiment much with the model hyperparameters and architecture, sticking to a modest model size and training time to obtain results I could actually work with. The aim was not high accuracy, but real-life usability.

I used these two posts to learn how to set up and train a word-level LSTM in Keras with the TensorFlow backend. I split each tokenized recipe into sequences of 21 words, with the final word serving as the prediction target for the first 20. Since the average recipe is ~293 words long, a 20-word context (~7% of an average recipe) seemed like a good way to retain information within each sequence. It also increased the number of training examples from 1,257 recipes to something a neural network would be happy with (the training log below shows 196,659 samples per epoch). I used an embedding size equal to the sequence length (20), two stacked LSTM layers with 50 units each, a 50-unit dense ReLU layer, and a softmax output layer that predicts the probability of each vocabulary word being the next word after a given sequence. I trained the network for 100 epochs with a mini-batch size of 128. All of these parameters can be tuned, and if I had access to a GPU, I would love to experiment with them.
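The windowing step described above can be sketched in plain Python. This is an illustration, not my exact preprocessing. The function name is hypothetical, and the one-token stride between windows is an assumption. Each window of 21 tokens yields one training example whose first 20 tokens are the input and whose last token is the target, so a recipe of n tokens contributes n - 20 examples. A recipe shorter than 21 tokens contributes none.

```python
def make_training_pairs(tokens, seq_length=20):
    """Slide a (seq_length + 1)-token window over one tokenized recipe.

    Returns (inputs, targets): each input holds seq_length tokens and each
    target is the token that follows them.
    """
    window = seq_length + 1
    sequences = [tokens[i:i + window] for i in range(len(tokens) - window + 1)]
    inputs = [seq[:-1] for seq in sequences]
    targets = [seq[-1] for seq in sequences]
    return inputs, targets


recipe = ("mix 100 g levain with 350 g water and 500 g bread flour then rest "
          "for 30 minutes before adding 10 g salt and folding").split()
X, y = make_training_pairs(recipe)
print(len(recipe), "tokens ->", len(X), "training pairs")
print(" ".join(X[0]), "->", y[0])
```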

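```python
import numpy as np
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from keras.models import Sequential
from keras.layers import Embedding, LSTM, Dense
from keras.callbacks import ModelCheckpoint
from keras.utils import to_categorical

# token_sequences: the 21-word sequences built from the tokenized recipes
tokenizer = Tokenizer()
tokenizer.fit_on_texts(token_sequences)
num_sequences = tokenizer.texts_to_sequences(token_sequences)
sequence_array = np.array(pad_sequences(num_sequences, padding='pre'))

vocab_size = len(tokenizer.word_index) + 1  # +1: index 0 is reserved for padding
seq_length = 20

# First 20 word ids are the input; the 21st is the one-hot target
X = sequence_array[:, :-1]
y = to_categorical(sequence_array[:, -1], num_classes=vocab_size)

model = Sequential()
model.add(Embedding(vocab_size, seq_length, input_length=seq_length))
model.add(LSTM(50, return_sequences=True))  # feed the full sequence to the next LSTM
model.add(LSTM(50))
model.add(Dense(50, activation='relu'))
model.add(Dense(vocab_size, activation='softmax'))

# path: checkpoint file location (defined elsewhere)
checkpoint = ModelCheckpoint(path, monitor='loss', verbose=1,
                             save_best_only=True, mode='min')
model.compile(loss='categorical_crossentropy', optimizer='adam',
              metrics=['accuracy'])
model.fit(X, y, batch_size=128, epochs=100, callbacks=[checkpoint])
```

The model took ~6 hours to train on my dual-core i5 MacBook Air and reached a training accuracy of ~39%. Honestly, I am impressed with my not-optimized-for-heavy-calculations laptop!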

Epoch 98/100

196659/196659 [==============================] - 169s 858us/step -

loss: 3.1610 - accuracy: 0.391

Epoch 99/100

196659/196659 [==============================] - 169s 858us/step -

loss: 3.1533 - accuracy: 0.3937

Epoch 100/100

196659/196659 [==============================] - 168s 856us/step -

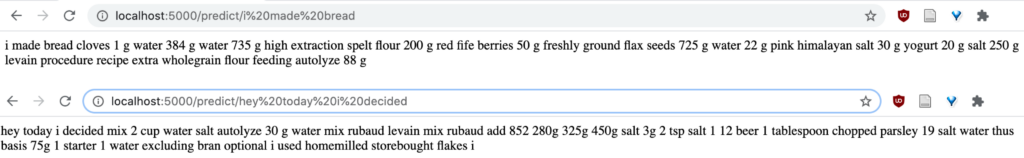

loss: 3.1489 - accuracy: 0.3935Once I had a trained model, I predicted new recipes by giving it the start of a new recipe along with the length of text to predict. After experimenting with different inputs, I found that the model really needs more training time to learn how to generate recipes. After 100 epochs, it produced legible bigrams and trigrams but not full sentences. It also doesn’t have a lot of data and hence, is prone to overfitting and could use some regularization. Additionally, different authors on The Fresh Loaf have different writing styles, and it is difficult to learn what the format of a recipe is when there are many ways to write one. The data exploration showed these differences, when looking at text lengths and the topics assigned to each recipe. Even with a systematic format, predicting recipes to actually use in the kitchen is not easy. Despite all these drawbacks, by supplying the model with the seed 'i made bread' and asking it to predict a 50-word long recipe, I obtained the following snippet.

i made bread cloves 1 g water 384 g water 735 g high extraction spelt flour 200 g red fife berries 50 g freshly ground flax seeds 725 g water 22 g pink himalayan salt 30 g yogurt 20 g salt 250 g levain procedure recipe extra wholegrain flour feeding autolyze 88 g

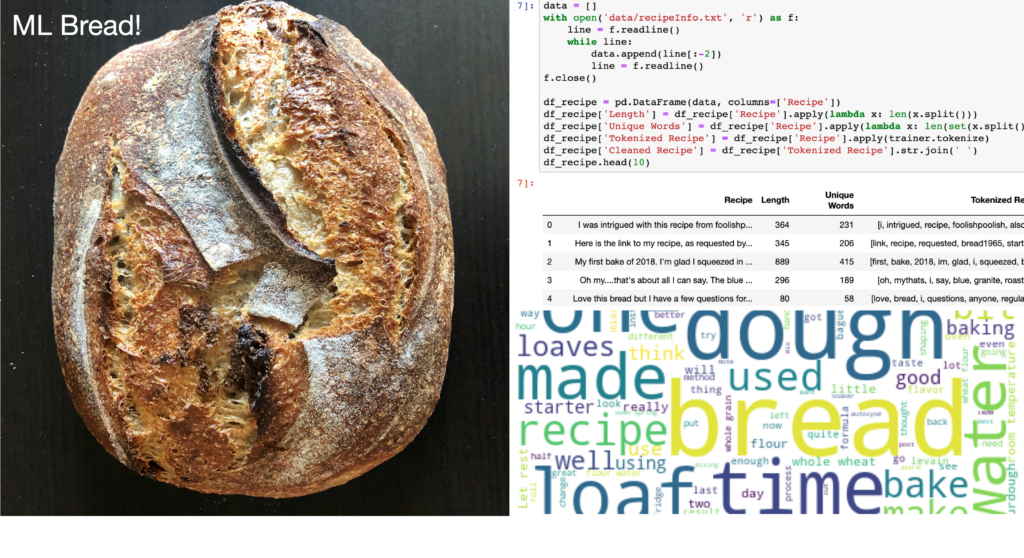

This actually contains a lot of useful information (underlined and bolded), and combined with my bread-baking experience, I was able to translate it into ML-inspired loaves of bread! I’ll admit, 50 g of ground flax seeds is a little much for my taste, but I really like the aroma of cloves and the addition of yogurt, and I will keep using both in the future.
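The generation step is a loop: encode the text so far, predict one word, append it, repeat. My generate_sentence helper isn't shown in this post, so the following is a hypothetical, framework-agnostic sketch rather than that code. Here predict_next stands in for the trained model, and greedy (argmax) decoding is an assumption. Passing a temperature switches to sampling instead.

```python
import math
import random


def generate_text(predict_next, word_index, seed_text, seq_length=20,
                  n_words=50, temperature=None, rng=None):
    """Extend seed_text by n_words words, one prediction at a time.

    predict_next stands in for the trained model: it takes a list of
    seq_length word ids and returns one probability per id, where id 0 is
    padding (as with a Keras Tokenizer's word_index).
    """
    index_word = {i: w for w, i in word_index.items()}
    rng = rng or random.Random()
    words = seed_text.lower().split()
    for _ in range(n_words):
        # Encode known words, keep the last seq_length ids and left-pad with 0,
        # mirroring pad_sequences(..., padding='pre')
        ids = [word_index[w] for w in words if w in word_index][-seq_length:]
        ids = [0] * (seq_length - len(ids)) + ids
        probs = predict_next(ids)
        candidates = range(1, len(probs))  # never emit the padding id
        if temperature is None:
            next_id = max(candidates, key=lambda i: probs[i])  # greedy decoding
        else:
            # Rescale probabilities by the temperature, then sample
            weights = [math.exp(math.log(probs[i] + 1e-12) / temperature)
                       for i in candidates]
            next_id = rng.choices(candidates, weights=weights)[0]
        words.append(index_word[next_id])
    return " ".join(words)
```

With a Keras model, predict_next could be something like `lambda ids: model.predict(np.array([ids]))[0]`, with `tokenizer.word_index` as the vocabulary. Greedy decoding tends to repeat itself, while a moderate temperature trades some accuracy for variety.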

To finish the project off, I built a Flask container so that I could call the ML predictor endpoint from a browser and see the recipe generated from the user’s input. This way, the model can be deployed, and with improvements to the neural network (training on more data and/or for longer), this functionality could be built out and made accessible to others as well. I’m excited to see where it can go!

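```python
from flask import Flask

import generate_recipe  # project module holding generate_sentence (not shown)

app = Flask(__name__)
# trained_model and tokenizer are loaded once at startup (loading code not shown)


@app.route('/predict/<text>')
def predict(text):
    seq_length = 20   # must match the sequence length used in training
    pred_length = 50  # number of words to generate after the seed
    predicted_text = generate_recipe.generate_sentence(trained_model,
                                                       tokenizer,
                                                       seq_length, text,
                                                       pred_length)
    return predicted_text
```

Learnings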

- Recipe formats vary a lot from author to author! Looking at the recipe lengths alone gave me a good idea of how varied they can be. To build a dataset for training ML models, it may be better to use a website maintained by a single person or one with a standardized format (see NYT Cooking).

- Topic modeling is a great way to get more insight into the salient features of a text-based dataset. In addition to understanding how the data is clustered, it can also be a way to label the data without too much manual labor, if the topics and words are well-separated and describe distinct classes.

- More data is always better. I only gathered 2 MB of recipes; with more data, I could train a more accurate and predictive neural network. I know we all hear this all the time, but this project really taught me how much access to big data can help with better modeling.

- LSTMs are cool! There are already more cutting-edge NLP tools available, like BERT and GPT-2, but for a model I can train on my MacBook Air in a few hours that still retains memory and context, LSTMs seem like a great neural net architecture to use.

With this, I accomplished my goal – to bake a loaf based on a neural network I trained on bread recipes. I learned a lot along the way and I hope anyone reading this does too. I enjoyed all the journeys this project took me on, and the ML-inspired bread I got to eat as a result 😀